Effective Naming Conventions in TestMonitor: Best Practices

It may seem like a minor element of software testing, but consistent and clear naming conventions play a key role in organizing complex test elements, easing collaboration, and simplifying traceability.

However, we recognize that developing that structure is often easier said than done. This Academy Article shares best practices your team can use for the various elements in TestMonitor, including requirements, test cases, milestones, test runs, and issues.

Here’s how:

Why Naming Conventions Matter

Having consistent and clear naming conventions is essential for:

- Improving the organization of testing elements and requirements, and making them easier to navigate.

- Enhancing collaboration among team members by providing a known structure across development focus areas.

- Facilitating traceability throughout the testing lifecycle.

- Reducing misunderstandings and errors.

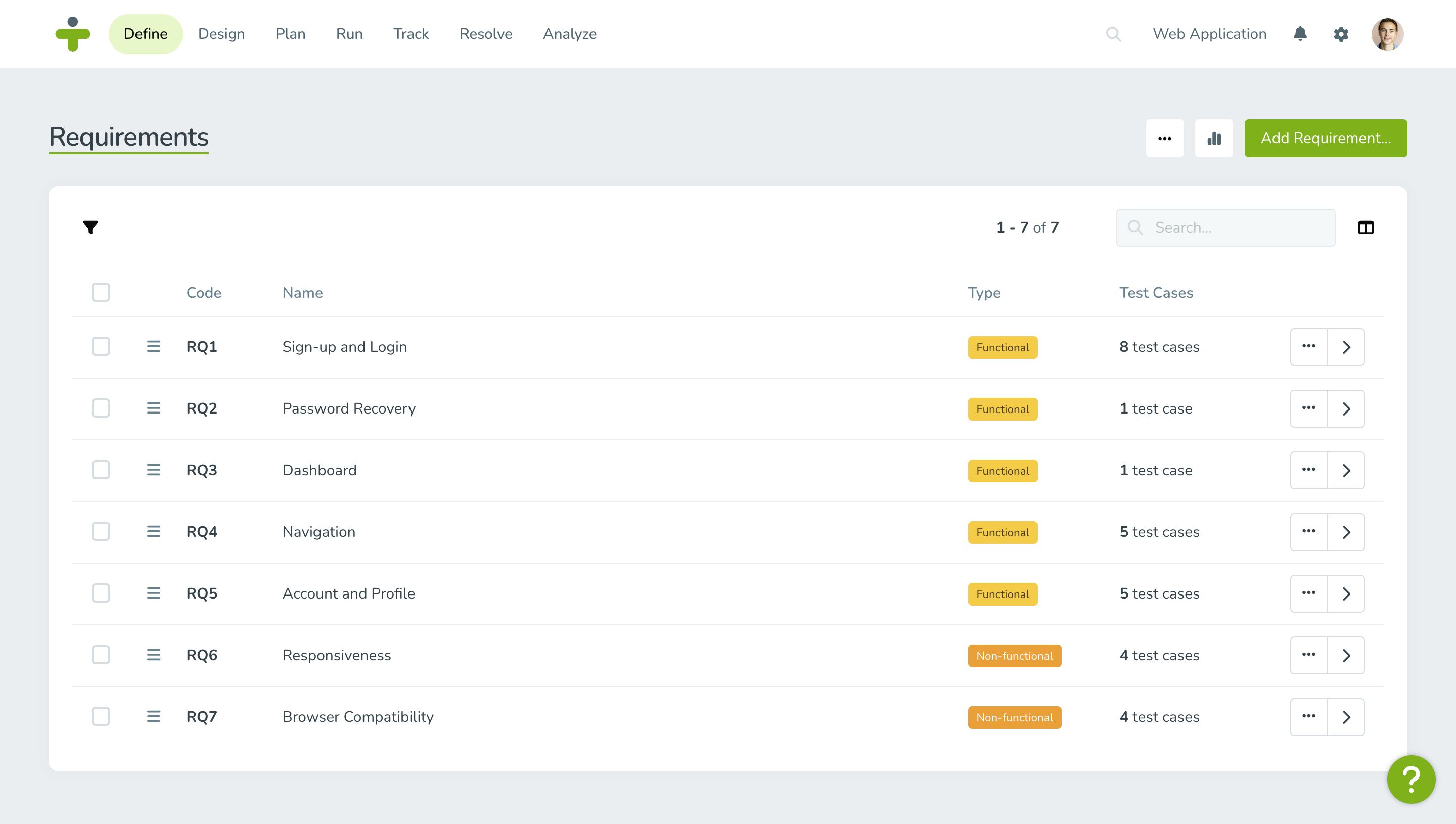

Naming Requirements

While every team can develop their own structure, here are some best practices:

- Use consistent naming patterns to facilitate quick identification of related items.

- Include identifiers or categories at the beginning of the name to differentiate between types of requirements. This will help team members quickly locate and identify the nature of each requirement.

- Use descriptive and unique names to help convey the purpose or functionality of each requirement without needing to read the details.

- Name requirements in a way that makes them easily linkable to corresponding test cases. This makes it simpler to track which requirements have corresponding test cases and which have been verified through testing.

An example that reflects each of these points could be: "RQ1: User Authentication."

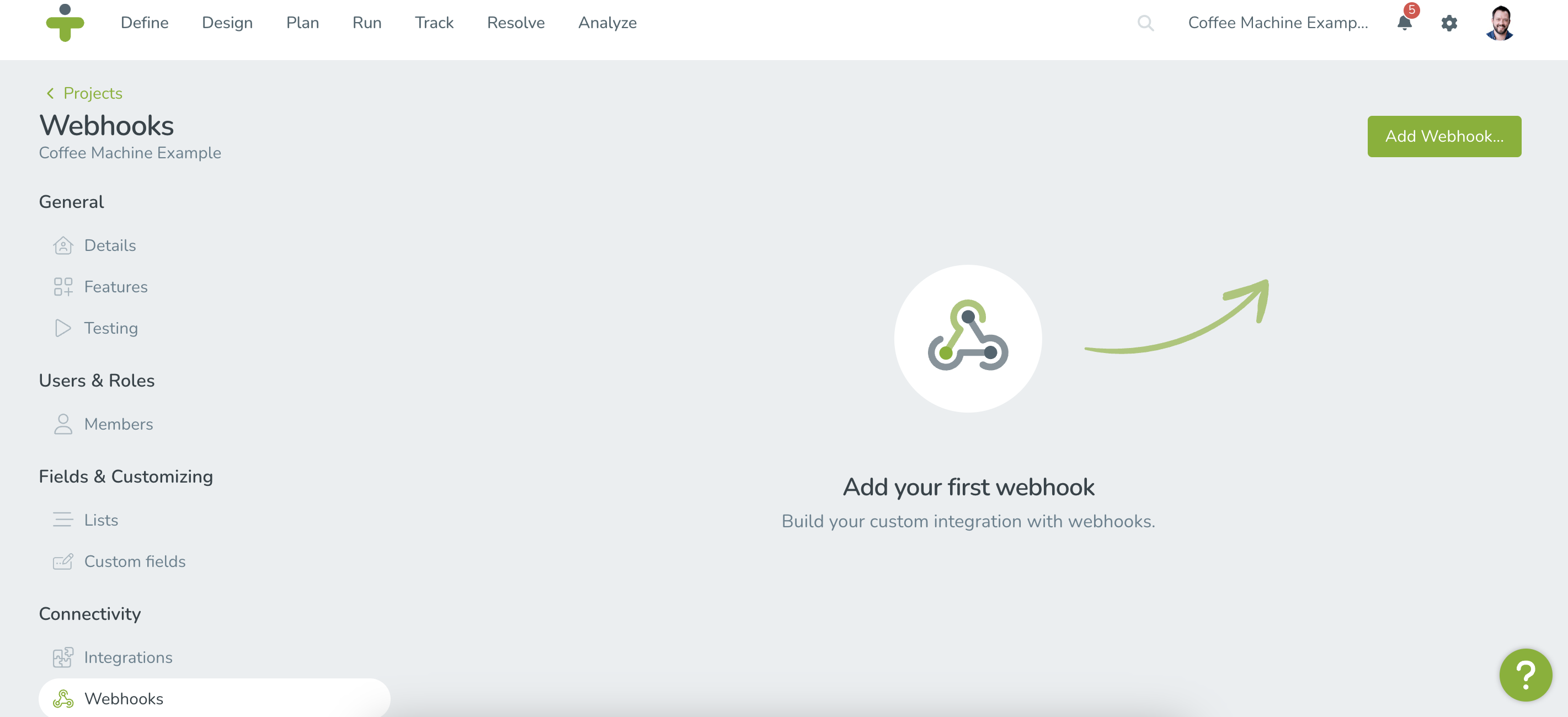

Note: TestMonitor creates Requirement, Test Case, and Issue naming codes for you when these items are added.
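
To illustrate why code-prefixed names make traceability easier, here is a minimal Python sketch that works on a plain list of names kept outside TestMonitor. The function name, the `links` mapping, and the "RQ2: Profile Management" requirement are hypothetical and exist only for this illustration. Nothing here calls TestMonitor itself.

```python
def uncovered_requirements(requirement_names, links):
    """Return the requirement names that no test case is linked to.

    `links` is a hypothetical mapping from a test case code (such as "TC1")
    to the requirement codes (such as "RQ1") that the test case verifies.
    """
    # Collect every requirement code that at least one test case points to.
    covered = {code for codes in links.values() for code in codes}
    # The code is the part of the name before the first colon, e.g. "RQ1".
    return [
        name
        for name in requirement_names
        if name.split(":", 1)[0].strip() not in covered
    ]


requirements = ["RQ1: User Authentication", "RQ2: Profile Management"]
links = {"TC1": ["RQ1"]}
print(uncovered_requirements(requirements, links))
```

Because every name starts with its code, the lookup needs only a split on the first colon rather than any matching on free-text descriptions.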

Naming Test Cases

Similar to naming requirements, test case names should clearly reflect their purpose and scope.

To start, implement a system of prefixes or keywords to group related test cases. You can even go a step further by using folders to group related test cases.

From there, ensure the naming convention is consistent across all test cases.

Examples include:

- TC1: Login - Successful User Login

- TC2: Register Account - Valid Credentials Registration

- TC3: Update Profile Information - Partial Fields Submission

- TC4: Search Functionality - Multiple Keywords Query

- TC5: Payment Process - Successful Transaction Completion

- TC6: Forgot Password - Correct Recovery Method Execution
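
The examples above share one shape: a code, a focus area, and a short description. As a rough sketch, assuming names follow exactly that `TC<number>: <Area> - <Description>` shape, a consistent convention lets a short Python script group test cases by area and flag names that drift from the pattern. The regular expression and the function name are assumptions made for this illustration, not part of TestMonitor.

```python
import re
from collections import defaultdict

# Assumed pattern, derived from names like "TC1: Login - Successful User Login".
TEST_CASE_NAME = re.compile(r"^(?P<code>TC\d+): (?P<area>.+?) - (?P<description>.+)$")


def group_by_area(names):
    """Group test case codes by focus area and collect names that do not match."""
    grouped = defaultdict(list)
    unmatched = []
    for name in names:
        match = TEST_CASE_NAME.match(name)
        if match:
            grouped[match["area"]].append(match["code"])
        else:
            unmatched.append(name)
    return dict(grouped), unmatched


grouped, unmatched = group_by_area([
    "TC1: Login - Successful User Login",
    "TC2: Register Account - Valid Credentials Registration",
    "login test",  # does not follow the convention
])
print(grouped)
print(unmatched)
```

In this run, "login test" ends up in the unmatched list, which is the kind of inconsistency a shared convention is meant to surface early.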

Naming Milestones and Test Runs

Milestone names should reflect the key project phases or deliverables.

For example: “Milestone 1: Initial Feature Set”.

Similarly, for Test Runs, create names that indicate the purpose, scope, and associated milestones. This could look like: “Sprint 1 Test Run—Regression Testing”.

Naming Issues

By now, you’re probably noticing a pattern. For issues, we recommend a unique identifier as a prefix, followed by the focus area of the issue and then a brief description of the bug, such as: “I29: Login - Error on Password Reset”.
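
The identifier, focus area, and description structure can be sketched as a small Python helper. This is an illustration only: TestMonitor assigns the issue code itself, so the `number` argument is there just to show the shape of the full name. The function name and the 60-character limit on the description are assumptions, not TestMonitor rules.

```python
def build_issue_name(number, area, description, max_description_length=60):
    """Compose an issue name such as "I29: Login - Error on Password Reset"."""
    area = area.strip()
    description = description.strip()
    if number < 1:
        raise ValueError("issue number must be positive")
    if not area or not description:
        raise ValueError("area and description are both required")
    # Hypothetical limit that keeps the description brief.
    if len(description) > max_description_length:
        raise ValueError("description is too long for an issue name")
    return f"I{number}: {area} - {description}"


print(build_issue_name(29, "Login", "Error on Password Reset"))
```

Rejecting empty or overlong parts up front keeps names short enough to scan in a list view.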

General Best Practices

Although it can take some time to integrate naming conventions like these, the efficiency benefits are well worth the effort. Once they are in place, we recommend:

- Adhering to naming conventions across all project elements for coherence.

- Establishing a centralized reference for naming guidelines to ensure uniform adoption.

- Encouraging teams to document and share their naming conventions.

- Regularly reviewing and updating naming standards to accommodate changing project needs.
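
One way to keep a centralized reference is to write the rules down in a form that can also be checked automatically. The Python sketch below assumes the patterns implied by this article's examples ("RQ1: ...", "TC1: ... - ...", "Milestone 1: ...", "I29: ... - ..."). The rule table and function name are hypothetical, and your team's own conventions may differ.

```python
import re

# Hypothetical rule table: one pattern per element type, based on the
# example names used in this article.
NAMING_RULES = {
    "requirement": re.compile(r"^RQ\d+: \S.*$"),
    "test_case": re.compile(r"^TC\d+: \S.* - \S.*$"),
    "milestone": re.compile(r"^Milestone \d+: \S.*$"),
    "issue": re.compile(r"^I\d+: \S.* - \S.*$"),
}


def find_violations(items):
    """Return the (kind, name) pairs whose name does not follow its rule."""
    return [
        (kind, name)
        for kind, name in items
        if not NAMING_RULES[kind].match(name)
    ]


print(find_violations([
    ("requirement", "RQ1: User Authentication"),
    ("milestone", "Milestone 1: Initial Feature Set"),
    ("issue", "Login broken"),  # missing code and focus area
]))
```

Keeping the rules in one table means that updating a convention is a single change, which fits the advice to review naming standards regularly.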
